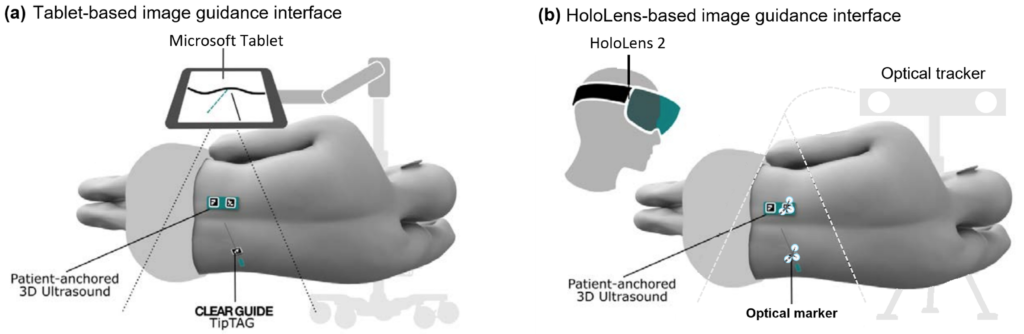

Lumbar punctures are among the most commonly performed spinal interventions in routine clinical practice, yet they are usually done with only manual palpation and trial and error. Failed attempts can prolong the procedure and lead to complications such as cerebrospinal fluid leaks and headaches. In this work, we therefore present a complete lumbar puncture guidance system that integrates (1) a wearable mechatronic ultrasound imaging device, (2) volume reconstruction and bone surface estimation algorithms, and (3) two alternative augmented reality (AR) user interfaces for needle guidance: a HoloLens-based solution and a tablet-based solution.

The integrated system concept

As shown below, we have developed two variants of a lumbar puncture (LP) guidance system based on the wearable ultrasound scanner. To the best of our knowledge, this is the first fully integrated LP guidance system that combines wearable imaging hardware, image processing algorithms, and UI components, and it provides all of the following advantages:

- Maintains the accurate image-anatomy relationship in the presence of patient movement.

- Provides hands-free, automatic image acquisition.

- Does not require a pre-operative CT scan or atlas-based registration of spine models.

- Does not require an expensive, bulky industrial robot arm.

- Provides multi-angle imaging of spine anatomy by controlling and measuring the exact geometry of ultrasound scanning motion.

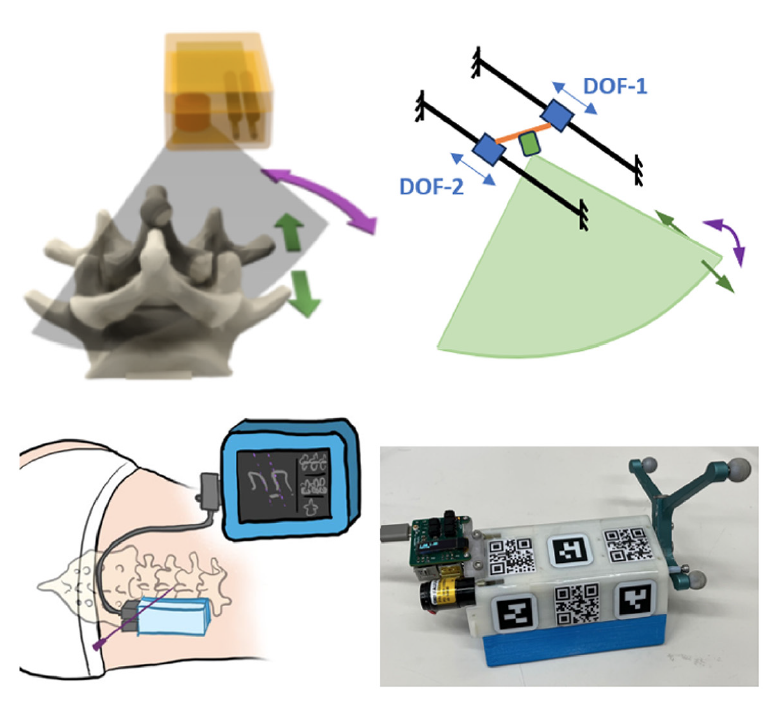

The first component: Wearable ultrasound scanner

We previously introduced a wearable ultrasound scanner designed for imaging the complex-shaped lumbar anatomy. Its phased array transducer can both rotate and translate (2 degrees of freedom, DOF), which reduces shadowing, increases the chance that ultrasound (US) beams strike bone surfaces at ideal incidence angles, and enlarges the field of view of the lumbar anatomy. The scanner is designed to be fixed to the patient with biocompatible adhesive, and its bottom part is a flexible polymer acoustic standoff pad that conforms to minor unevenness of the patient’s skin. In this study, we used an improved version of the scanner with a similar mechanical design, housing a custom 2.75 MHz phased array transducer, with overall dimensions of 110 mm (L) × 70 mm (W) × 48 mm (H) and a weight of 307 g. The ultrasound images were captured with an Ultrasonix SonixTablet.
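The scanner kinematics turn the two motor encoder readings into a pose for each 2D image. The sketch below uses a simple hypothetical model, not the scanner's actual geometry: the image plane is the x-z plane of the probe frame, the rotary stage tilts the probe about its lateral x axis, and the linear stage then moves it along the scanner y axis. The function name `frame_pose` and all axis conventions are illustrative assumptions.

```python
import numpy as np


def frame_pose(translation_mm: float, tilt_deg: float) -> np.ndarray:
    """Return a 4x4 homogeneous transform from the image frame to the scanner frame.

    Hypothetical 2-DOF model: tilt the probe about its lateral x axis,
    then translate it along the scanner y axis by the linear-stage reading.
    """
    a = np.deg2rad(tilt_deg)
    c, s = np.cos(a), np.sin(a)
    rotation = np.array([[1.0, 0.0, 0.0, 0.0],
                         [0.0, c, -s, 0.0],
                         [0.0, s, c, 0.0],
                         [0.0, 0.0, 0.0, 1.0]])
    translation = np.eye(4)
    translation[1, 3] = translation_mm
    # The tilt is applied first, then the stage translation.
    return translation @ rotation


# Example sweep: 5 linear positions x 3 tilt angles -> 15 frame poses.
poses = [frame_pose(t, a) for t in np.linspace(0.0, 40.0, 5) for a in (-15.0, 0.0, 15.0)]
```

Because both joints are measured directly, every frame gets an exact pose without any external tracker; only the model's axis conventions would need to match the real hardware.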

To integrate the wearable scanner with the two navigation interfaces, three types of camera markers were attached to the scanner housing: AprilTags (19 mm × 19 mm), QR codes (25 mm × 25 mm), and infrared optical markers. The scanner housing is 3D printed with engraved markings so that the AprilTags and QR codes can be attached accurately at known locations in the scanner coordinate frame. The AprilTags are tracked by the tablet camera, and the QR codes are tracked by the HoloLens 2.
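Because each marker sits at a known location on the housing, the scanner pose in a camera's frame can be obtained by composing the tracked marker pose with the fixed marker-to-scanner transform. A minimal sketch with 4x4 homogeneous matrices follows; the function names and the `T_a_b` naming convention (maps coordinates from frame b to frame a) are assumptions for illustration, and the marker detection itself (AprilTag or HoloLens QR code tracking) is not shown.

```python
import numpy as np


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def invert_transform(T):
    """Invert a rigid transform using R^T instead of a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    return make_transform(R.T, -R.T @ t)


def scanner_in_camera(T_cam_tag, T_scanner_tag):
    """Pose of the scanner in the camera frame (scanner -> camera).

    T_cam_tag:     tag -> camera, as estimated by the marker detector.
    T_scanner_tag: tag -> scanner, fixed by the engraved mounting location.
    """
    return T_cam_tag @ invert_transform(T_scanner_tag)
```

Chaining the result with a per-frame image-to-scanner transform from the scanner kinematics would then place each ultrasound image in the camera frame.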

The second component: image processing software

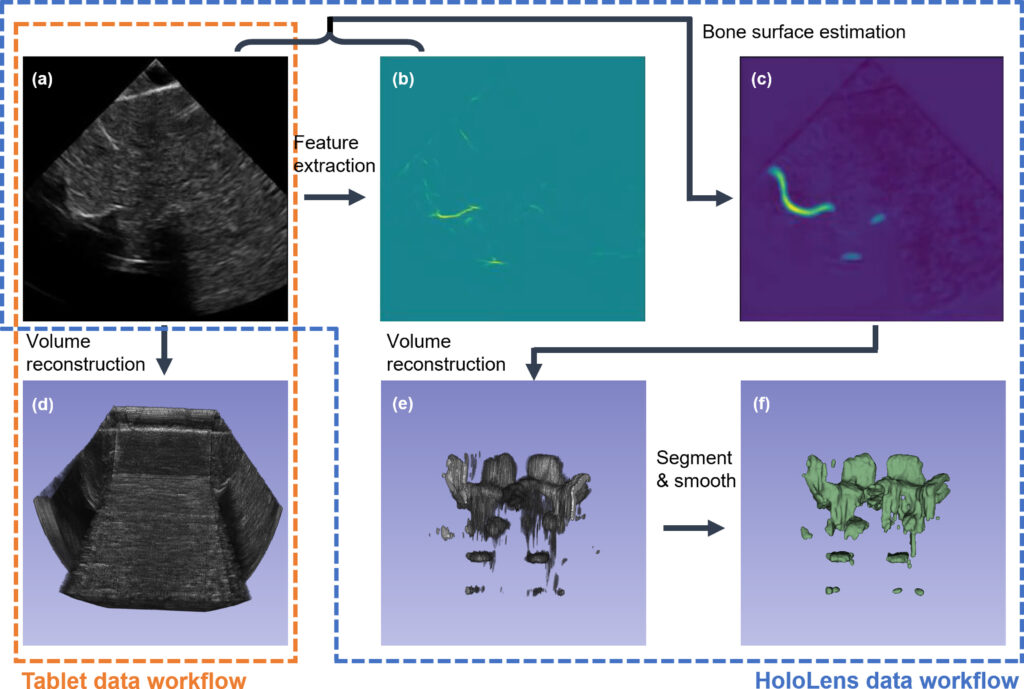

Tablet-Interface Data Workflow: The tablet-based navigation interface requires a reconstructed 3D ultrasound volume for needle path planning and guidance. During scanning, the motor encoder readings are recorded for each 2D ultrasound image, and the image's spatial transformation is computed from them using the scanner kinematics. Given the B-mode image data, the corresponding spatial transformations, and the pixel spacing, we reconstruct a 3D ultrasound volume with the averaged voxel nearest-neighbor (VNN) method.
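Averaged VNN compounding assigns every pixel to its nearest voxel and averages all pixels that land in the same voxel. A minimal NumPy sketch, assuming one 4x4 image-to-volume transform per frame, pixel (0, 0) at the image-frame origin with columns along x and rows (depth) along z, and a regular isotropic voxel grid; the function and parameter names are hypothetical, and hole filling for empty voxels is not shown.

```python
import numpy as np


def vnn_reconstruct(frames, poses, pixel_spacing, origin, voxel_size, shape):
    """Averaged voxel nearest-neighbour (VNN) reconstruction.

    frames:        list of 2D arrays (rows = depth, columns = lateral)
    poses:         list of 4x4 image-to-volume transforms, one per frame
    pixel_spacing: (lateral, depth) pixel size in mm
    origin:        volume origin in mm, shape (3,)
    voxel_size:    isotropic voxel edge length in mm
    shape:         number of voxels along (x, y, z)
    """
    origin = np.asarray(origin, dtype=float)[:, None]
    dims = np.asarray(shape)[:, None]
    total = np.zeros(shape)
    count = np.zeros(shape, dtype=np.int64)
    for image, T in zip(frames, poses):
        rows, cols = np.indices(image.shape)
        # Homogeneous pixel coordinates in the image frame (the image lies in y = 0).
        pts = np.stack([cols.ravel() * pixel_spacing[0],
                        np.zeros(image.size),
                        rows.ravel() * pixel_spacing[1],
                        np.ones(image.size)])
        xyz = (np.asarray(T) @ pts)[:3]
        # Nearest voxel index for every pixel; pixels outside the grid are dropped.
        idx = np.rint((xyz - origin) / voxel_size).astype(int)
        inside = np.all((idx >= 0) & (idx < dims), axis=0)
        ix, iy, iz = idx[:, inside]
        # np.add.at accumulates correctly when several pixels hit the same voxel.
        np.add.at(total, (ix, iy, iz), image.ravel()[inside])
        np.add.at(count, (ix, iy, iz), 1)
    filled = count > 0
    volume = np.zeros(shape)
    volume[filled] = total[filled] / count[filled]
    return volume, filled
```

The returned `filled` mask marks voxels that received at least one pixel, which is useful for deciding where interpolation or hole filling would be needed. The same routine could compound per-frame heatmaps instead of B-mode intensities.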

HoloLens-Interface Data Workflow: Presenting raw ultrasound data to the user is less intuitive in the HoloLens environment. Instead, we display a hologram of a 3D mesh of the spine surfaces generated from the ultrasound image data. We first estimate the bone surface using deep learning models and then reconstruct a bone surface estimation volume from the network outputs and the corresponding spatial transformation matrices. The reconstructed volume is further processed with automatic binary Otsu thresholding, followed by volumetric morphological closing and opening. The binary 3D surface volume is then converted into a mesh model with the Flying Edges algorithm implemented in 3D Slicer, and this mesh is used for needle path planning and guidance in the AR environment.
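The thresholding and morphology steps can be sketched in NumPy as below. This is an illustrative stand-in rather than the 3D Slicer implementation: Otsu's threshold is computed from a 256-bin histogram, and closing and opening use a 6-connected structuring element, which is an assumption since the structuring element is not stated. The Flying Edges meshing step (available in VTK as `vtkFlyingEdges3D`) is not shown.

```python
import numpy as np


def otsu_threshold(values, bins=256):
    """Return the histogram bin edge that maximizes between-class variance."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                 # voxels in the background class
    w1 = w0[-1] - w0                     # voxels in the foreground class
    s0 = np.cumsum(hist * centers)
    mu0 = s0 / np.maximum(w0, 1)
    mu1 = (s0[-1] - s0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    k = int(np.argmax(between))
    return edges[k + 1]                  # upper edge of the last background bin


def dilate(mask):
    """Binary dilation with the 6-connected cross; outside voxels count as background."""
    p = np.pad(mask, 1, constant_values=False)
    out = p[1:-1, 1:-1, 1:-1].copy()
    out |= p[:-2, 1:-1, 1:-1] | p[2:, 1:-1, 1:-1]
    out |= p[1:-1, :-2, 1:-1] | p[1:-1, 2:, 1:-1]
    out |= p[1:-1, 1:-1, :-2] | p[1:-1, 1:-1, 2:]
    return out


def erode(mask):
    """Binary erosion with the 6-connected cross; outside voxels count as foreground."""
    p = np.pad(mask, 1, constant_values=True)
    out = p[1:-1, 1:-1, 1:-1].copy()
    out &= p[:-2, 1:-1, 1:-1] & p[2:, 1:-1, 1:-1]
    out &= p[1:-1, :-2, 1:-1] & p[1:-1, 2:, 1:-1]
    out &= p[1:-1, 1:-1, :-2] & p[1:-1, 1:-1, 2:]
    return out


def segment_bone(volume):
    """Otsu threshold, then closing (fill small gaps) and opening (drop isolated specks)."""
    mask = volume >= otsu_threshold(volume)
    closed = erode(dilate(mask))
    return dilate(erode(closed))
```

Closing before opening lets small gaps in the estimated bone surface be bridged first, after which opening removes isolated false-positive voxels that would otherwise appear as floating fragments in the hologram.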

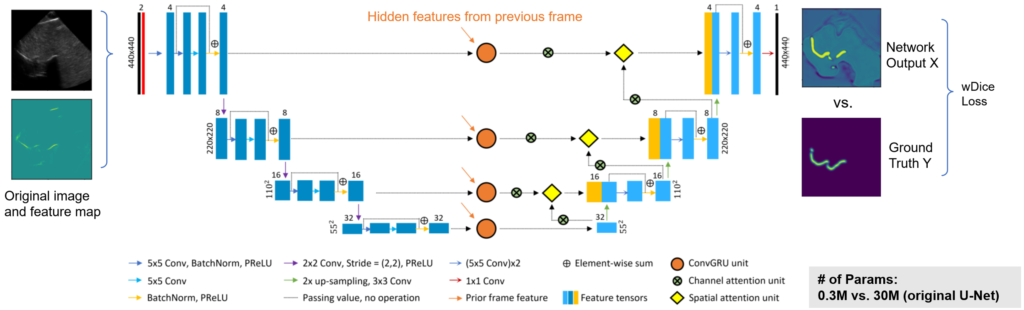

To estimate the bone surface from ultrasound images, we developed a deep learning-based algorithm in which an aggregated feature extractor is applied to the raw 2D ultrasound image to generate a bone feature map that exploits the local phase and shadowing information in the image. Both the raw 2D US image and the feature map are then fed into a spatiotemporal U-Net, which produces a spine surface estimation heatmap. To demonstrate that the algorithm generalizes, the network used in the user study was trained on image data captured from a separate spine phantom.
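To make the input side of this pipeline concrete, the sketch below computes a simple shadow cue and stacks neighboring frames with their feature maps into one multi-channel array. It is a hypothetical illustration, not the authors' aggregated feature extractor: the local phase component and the U-Net itself are omitted, and the scoring formula, function names, and the window size `k` are assumptions.

```python
import numpy as np


def shadow_feature(image, eps=1e-6):
    """Hypothetical shadow cue (rows = depth): a bright pixel whose column is
    dark below it, as behind a bone surface, scores close to 1."""
    img = np.asarray(image, dtype=float)
    n_rows = img.shape[0]
    # Sum of intensities strictly below each pixel in its column.
    below_sum = np.cumsum(img[::-1], axis=0)[::-1] - img
    n_below = (n_rows - 1 - np.arange(n_rows))[:, None]
    below_mean = below_sum / np.maximum(n_below, 1)
    score = img / (img + below_mean + eps)
    score[-1, :] = 0.0  # the deepest row has nothing below it to compare against
    return score


def spatiotemporal_input(frames, t, k=1):
    """Stack frames t-k..t+k (clamped at the sequence ends) and their shadow maps
    into one (2 * (2k + 1), H, W) array for a multi-channel network input."""
    idx = np.clip(np.arange(t - k, t + k + 1), 0, len(frames) - 1)
    raw = [np.asarray(frames[i], dtype=float) for i in idx]
    feats = [shadow_feature(frames[i]) for i in idx]
    return np.stack(raw + feats)
```

Feeding adjacent frames together gives the network temporal context from the scanning motion, which is the general idea behind a spatiotemporal model; how the actual network consumes its inputs may differ.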

The third component: AR user interfaces

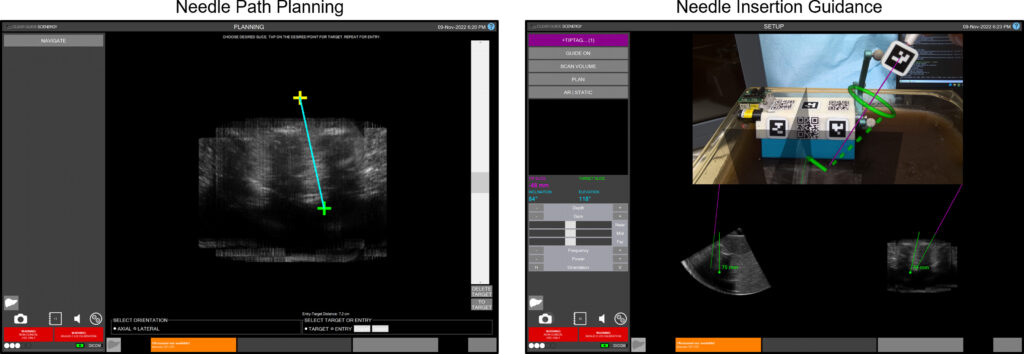

Tablet-based navigation interface (see the image below). Left: Using the path planning interface, the user selects an entry point (yellow) and a target point (green). Right: During needle insertion, a solid green arrow is drawn from the tracked needle tip to the planned entry point. The green dotted line indicates the target needle angle, and the green torus represents the angular alignment error in the camera depth direction.

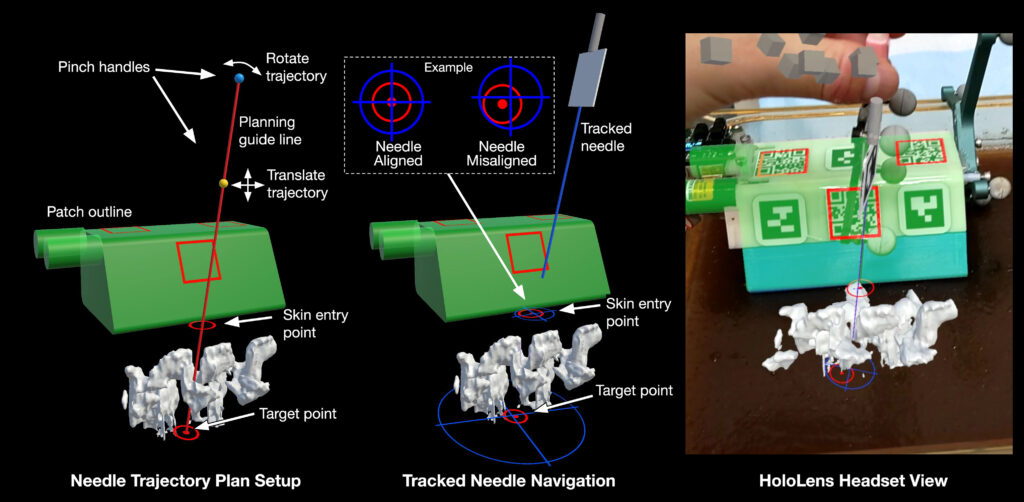
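The quantities behind these cues can be computed from the tracked needle pose and the planned points, all expressed in the camera frame. A minimal sketch under stated assumptions: the function name is hypothetical, the needle axis is given as a direction vector, camera z is taken as the depth direction, and the depth error is defined here as the difference in out-of-plane elevation angle, which may not match how the interface actually derives the torus.

```python
import numpy as np


def guidance_cues(tip, needle_dir, entry, target):
    """Return (arrow, total_error_deg, depth_error_deg) in the camera frame.

    arrow:           vector from the tracked needle tip to the planned entry point
    total_error_deg: angle between the needle axis and the planned path
    depth_error_deg: difference in elevation out of the image plane (camera z)
    """
    tip, entry, target = (np.asarray(v, dtype=float) for v in (tip, entry, target))
    n = np.asarray(needle_dir, dtype=float)
    n = n / np.linalg.norm(n)
    p = (target - entry) / np.linalg.norm(target - entry)
    arrow = entry - tip
    total = np.degrees(np.arccos(np.clip(n @ p, -1.0, 1.0)))
    depth = np.degrees(np.arcsin(np.clip(n[2], -1.0, 1.0)) - np.arcsin(np.clip(p[2], -1.0, 1.0)))
    return arrow, total, depth
```

For example, a needle 10 mm above the entry point and already aligned with the plan yields an arrow of (0, 0, 10) and zero angular error.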

HoloLens-based navigation interface (see the image below). Left: In planning mode, the user selects a needle trajectory by positioning the red planning guide line. The built-in hand tracking of the HoloLens 2 allows the user to pinch and drag the yellow and teal grab handles: the yellow sphere translates the line, while the teal sphere rotates it around the target point. Center: During needle insertion, the user follows the trajectory plan by lining up the blue crosshairs (the expected path of the tracked needle) with the red circular visual cues (the planned trajectory). Right: The scanner outline (green) and the tracked needle position are displayed as holograms so that the user can verify tracking accuracy; the spine model is the same as in the left panel.
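The two handle behaviors can be modeled as simple geometric updates on an entry point and a target point. The class below is a hypothetical sketch of that logic, not the HoloLens application code; real interaction would be driven by HoloLens 2 hand-tracking events, which are not modeled here. The `offset_of` method shows one way to quantify how far a point on the needle's expected path lies from the planned axis.

```python
import numpy as np


class PlanningLine:
    """Hypothetical model of the planning guide line: an entry point and a target point."""

    def __init__(self, entry, target):
        self.entry = np.asarray(entry, dtype=float)
        self.target = np.asarray(target, dtype=float)

    def translate(self, delta):
        """Yellow handle: move the whole line by the drag displacement."""
        d = np.asarray(delta, dtype=float)
        self.entry = self.entry + d
        self.target = self.target + d

    def rotate_toward(self, handle_pos):
        """Teal handle: pivot about the fixed target so the line points at the handle,
        keeping the entry-to-target length unchanged."""
        length = np.linalg.norm(self.entry - self.target)
        v = np.asarray(handle_pos, dtype=float) - self.target
        self.entry = self.target + length * v / np.linalg.norm(v)

    def offset_of(self, point):
        """Perpendicular distance from a point to the (infinite) planned line."""
        u = self.entry - self.target
        u = u / np.linalg.norm(u)
        w = np.asarray(point, dtype=float) - self.target
        return float(np.linalg.norm(w - (w @ u) * u))
```

Keeping the target fixed during rotation matches the described behavior of the teal handle: the user can change the approach angle without losing the chosen target.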

References

- Xu, K., Jiang, B., Moghekar, A., Kazanzides, P. and Boctor, E., 2022. AutoInFocus, a new paradigm for ultrasound-guided spine intervention: a multi-platform validation study. International Journal of Computer Assisted Radiology and Surgery, 17(5), pp.911-920.

- Jiang, B., Wang, L., Xu, K., Hossbach, M., Demir, A., Rajan, P., Taylor, R.H., Moghekar, A., Foroughi, P., Kazanzides, P. and Boctor, E.M., 2023. Wearable Mechatronic Ultrasound-Integrated AR Navigation System for Lumbar Puncture Guidance. IEEE Transactions on Medical Robotics and Bionics.

- Jiang, B., Xu, K., Moghekar, A., Kazanzides, P. and Boctor, E.M., 2022, October. Insonification Angle-based Ultrasound Volume Reconstruction for Spine Intervention. In 2022 IEEE International Ultrasonics Symposium (IUS) (pp. 1-4). IEEE.

- Jiang, B., Xu, K., Moghekar, A., Kazanzides, P. and Boctor, E., 2023, April. Feature-aggregated spatiotemporal spine surface estimation for wearable patch ultrasound volumetric imaging. In Medical Imaging 2023: Ultrasonic Imaging and Tomography (Vol. 12470, pp. 111-117). SPIE.